It’s 9:00 AM on a regular Monday.

Priya, a project manager at a growing tech startup, is juggling five browser tabs, four dashboards, and three different AI tools.

One AI drafts her reports, another analyzes data, and a third runs marketing automations. Yet, despite all these “intelligent” assistants, Priya still spends her mornings fixing miscommunications between them – data lost in translation, hallucinated numbers, inconsistent insights.

Now imagine if these AIs could talk to each other, coordinate tasks, learn from mistakes, and verify facts – all autonomously.

That’s exactly what AROW (Agentic Reinforced and Operational Workflow) sets out to achieve.

The Problem with Today’s “Smart” AI Agents 🤖

AI agents today are like brilliant but disorganized teammates.

Each performs its task well in isolation – writing, analyzing, coding, or summarizing – but when combined in real-world workflows, chaos ensues.

Two major challenges emerge:

- Credit Assignment – When multiple agents collaborate, who gets rewarded for success or blamed for failure?

- Factual Reliability – How do we ensure that agents don’t “hallucinate” or make things up when working together?

TenseAi saw these two challenges as the biggest barriers to scaling autonomous AI operations. Their answer was to blend multi-agent reinforcement learning (MARL) with structured, verifiable task orchestration.

QMIX + COMA + RAG

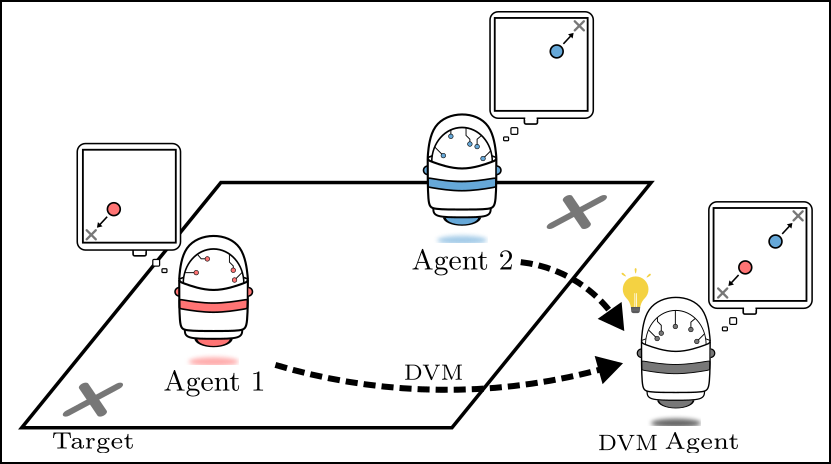

Under the hood, TenseAi’s AROW combines two multi-agent reinforcement learning methods with a retrieval layer:

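- QMIX: Learns a joint team value from each agent’s individual value estimates, constrained so that an agent improving its own choice never lowers the team’s estimated value.

- COMA (Counterfactual Multi-Agent Policy Gradients): Teaches agents to estimate their unique contribution to the outcome by comparing what happened with a counterfactual – “What if I had acted differently?”

- RAG (Retrieval-Augmented Generation): Has agents retrieve relevant source documents first, so answers are grounded in evidence rather than in the model’s memory alone.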

Together, they create a balance of autonomy and accountability – each agent acts independently but aligns toward a shared goal, rewarded not just for activity but for accuracy.
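To make the credit-assignment idea concrete, here is a minimal Python sketch. It is not TenseAi's implementation: the two-agent task, the `verify` and `skip` actions, and the joint value table are all hypothetical. `qmix_total` is a linear simplification of QMIX's mixing step (the actual method uses state-conditioned mixing networks), and `coma_advantage` computes the counterfactual advantage that COMA is built around.

```python
# Toy illustration only: all names and numbers here are assumptions.

def qmix_total(agent_qs, raw_weights, bias=0.0):
    """Mix per-agent values into a team value with non-negative weights.

    Taking abs() of each weight keeps the mix monotonic: raising any
    single agent's value can never lower the team value.
    """
    return sum(abs(w) * q for w, q in zip(raw_weights, agent_qs)) + bias


def coma_advantage(q_joint, joint_action, agent, policy):
    """Counterfactual advantage for one agent.

    Compares the value of the joint action actually taken with the
    expected value if only this agent's action were resampled from its
    policy while every other agent's action stays fixed.
    """
    baseline = 0.0
    for action, prob in policy.items():
        counterfactual = list(joint_action)
        counterfactual[agent] = action
        baseline += prob * q_joint[tuple(counterfactual)]
    return q_joint[tuple(joint_action)] - baseline


# Hypothetical joint values for two agents that can each verify or skip.
Q_JOINT = {
    ("verify", "verify"): 1.0,
    ("verify", "skip"): 0.6,
    ("skip", "verify"): 0.5,
    ("skip", "skip"): 0.0,
}
UNIFORM = {"verify": 0.5, "skip": 0.5}

taken = ("verify", "skip")
print(round(coma_advantage(Q_JOINT, taken, 0, UNIFORM), 2))  # 0.3
print(round(coma_advantage(Q_JOINT, taken, 1, UNIFORM), 2))  # -0.2
```

In this toy table, the agent that verified gets positive credit and the agent that skipped gets negative credit, even though both received the same team outcome.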

🛡️ Fighting Hallucination – AROW’s Secret Weapon

Hallucination isn’t just a technical issue; it’s a trust issue.

AROW tackles this head-on through a multi-layer defense system:

- 🔍 Evidence Grounding (RAG): Agents retrieve context before responding.

- 🧾 JSON Schema Enforcement: Every output must match a strict structure, so malformed responses can be rejected automatically.

- 🧠 Provenance Tracking: Each fact must cite its source.

- 🚫 Reward Penalties: Unverified or overconfident answers cost the agent points.

The result? Outputs that are not only smarter but also more trustworthy – reducing AI hallucinations by up to 75% in testing.
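The layers above could fit together roughly as follows. This is a sketch under stated assumptions, not AROW's actual code: the field names (`answer`, `confidence`, `sources`), the document IDs, and the penalty size are invented for illustration, and the structure check is hand-rolled rather than a real JSON Schema validator.

```python
import json

# Hypothetical output contract: field name -> accepted Python type(s).
REQUIRED_FIELDS = {
    "answer": str,
    "confidence": (int, float),
    "sources": list,
}


def validate_output(output, retrieved_ids):
    """Return a list of problems; an empty list means the output passes."""
    problems = []
    # Structure check: every required field present with the right type.
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in output:
            problems.append(f"missing field: {field}")
        elif not isinstance(output[field], expected_type):
            problems.append(f"wrong type for field: {field}")
    if problems:
        return problems
    # Provenance check: each cited source must be one the agent retrieved.
    if not output["sources"]:
        problems.append("no sources cited")
    for source_id in output["sources"]:
        if source_id not in retrieved_ids:
            problems.append(f"unverified source: {source_id}")
    return problems


def shaped_reward(task_reward, problems, penalty=0.5):
    """Subtract a fixed (assumed) penalty for every validation problem."""
    return task_reward - penalty * len(problems)


raw = '{"answer": "Q3 revenue grew 12%", "confidence": 0.9, "sources": ["doc-7"]}'
output = json.loads(raw)
problems = validate_output(output, retrieved_ids={"doc-1", "doc-2"})
print(problems)                      # ['unverified source: doc-7']
print(shaped_reward(1.0, problems))  # 0.5
```

Here the answer is well-formed but cites a document the agent never retrieved, so it loses half of its reward.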

🧪 Experiments That Prove the Point

The team tested AROW in two setups:

- Cooperative Simulations – Think of robots coordinating to collect resources. Even when one “agent” failed, others adapted dynamically, improving team efficiency by 35%.

- Document-Grounded Q&A – AROW agents had to retrieve and answer questions based on factual text. Baseline systems hallucinated 20% of the time. AROW reduced that to under 5%.

Agents even learned to say “I don’t know” when uncertain – something most AI models rarely admit.
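One way to see why a penalty on wrong answers can teach abstention: suppose, as an assumption for this sketch, that a correct answer earns +1, a wrong answer costs a fixed penalty, and “I don’t know” earns 0. Answering then only pays off when the agent’s confidence exceeds penalty / (1 + penalty). The reward values below are illustrative, not figures from AROW.

```python
def expected_answer_reward(confidence, wrong_penalty):
    """Expected reward of answering: +1 if right, -wrong_penalty if wrong."""
    return confidence * 1.0 - (1.0 - confidence) * wrong_penalty


def should_answer(confidence, wrong_penalty):
    """Answer only if that beats the 0 reward for saying 'I don't know'."""
    return expected_answer_reward(confidence, wrong_penalty) > 0.0


def abstain_threshold(wrong_penalty):
    """Confidence level at which answering and abstaining break even."""
    return wrong_penalty / (1.0 + wrong_penalty)


print(abstain_threshold(3.0))   # 0.75
print(should_answer(0.9, 3.0))  # True
print(should_answer(0.6, 3.0))  # False
```

With a penalty of 3, an agent that is only 60% sure does better by abstaining, which matches the behavior described above.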

🚀 Why AROW Matters

The promise of AROW isn’t just technical – it’s transformational.

Imagine:

- Research assistants that validate every citation before submission.

- Customer support systems where bots collaborate instead of confusing customers.

- Autonomous business workflows that optimize themselves with each cycle.

In short, AROW bridges the gap between intelligence and integrity – making AI not just capable, but credible.

🌍 The Future of Agentic AI

AROW is still early-stage, but its implications reach far beyond the lab.

As organizations move toward autonomous digital operations, frameworks like AROW could become the backbone of self-improving AI ecosystems – ones that reason, verify, and evolve without human babysitting.

In the next few years, expect to see AROW-like systems powering everything from autonomous research pipelines to enterprise-grade robotic automation.